Poker Neural Network Example

In Opponent Modeling and Exploitation in Poker Using Evolved Recurrent Neural Networks (Xun Li, 2018), poker, in particular Heads-Up No-Limit Texas Hold'em, serves as a classic example of an imperfect-information game.

Preparing data for the Neural Network Toolbox: there are two basic types of input vectors, those that occur concurrently (at the same time, or in no particular time sequence) and those that occur sequentially in time.

Artificial Neural Networks (ANN) are a mathematical construct that ties together a large number of simple elements, called neurons, each of which can make simple mathematical decisions. Together, the neurons can tackle complex problems and questions and provide surprisingly accurate answers.

About 200 machines across the country, called "Texas Hold 'Em Heads Up Poker," use knowledge gained from billions of staged rounds of poker fed through neural networks.

Neural networks have become standard in many areas of industry. The challenge, however, was to design a learning algorithm for poker, a very complex multi-player game with hidden information.

Building a Neural Network in Python

I’m Jose Portilla. I teach thousands of students on Udemy about data science and programming, and I also conduct in-person programming and data science training. For more info, you can reach me at training AT pieriandata.com.

The code and data for this tutorial are in Springboard’s blog tutorials repository, if you want to follow along.

The most popular machine learning library for Python is SciKit-Learn. Version 0.18 added built-in support for neural network models! In this article we will learn how neural networks work and how to implement them with the Python programming language and SciKit-Learn. A basic understanding of Python is necessary to follow this article, and it would also be helpful (but not necessary) to have some experience with SciKit-Learn.

Neural Networks

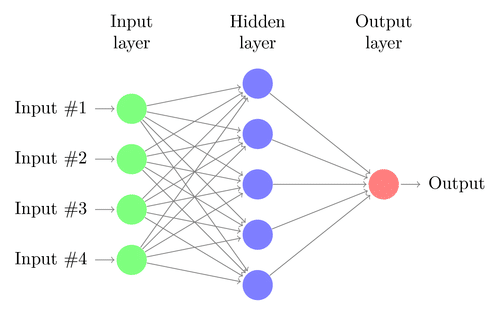

Neural Networks are a machine learning framework that attempts to mimic the learning pattern of natural biological neural networks: you can think of them as a crude approximation of what we assume the human mind is doing when it is learning. Biological neural networks have interconnected neurons with dendrites that receive inputs, then based on these inputs they produce an output signal through an axon to another neuron. We will try to mimic this process through the use of Artificial Neural Networks (ANN), which we will just refer to as neural networks from now on. Neural networks are the foundation of deep learning, a subset of machine learning that is responsible for some of the most exciting technological advances today! The process of creating a neural network in Python begins with the most basic form, a single perceptron. Let’s start by explaining the single perceptron!

The Perceptron

Let’s start our discussion by talking about the Perceptron! A perceptron has one or more inputs, a bias, an activation function, and a single output. The perceptron receives inputs, multiplies each one by a weight, sums the results together with the bias, and then passes that sum into an activation function to produce an output. There are many possible activation functions to choose from, such as the logistic function, a trigonometric function, a step function, etc. The bias is a constant weight that does not depend on the inputs; it shifts the activation threshold and allows us to achieve a better fit for our predictive models. Check out the diagram below for a visualization of a perceptron:

Once we have the output we can compare it to a known label and adjust the weights accordingly (the weights usually start off with random initialization values). We keep repeating this process until we have reached a maximum number of allowed iterations, or an acceptable error rate.
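The forward pass and the weight-update loop described in the last two paragraphs can be sketched in NumPy. This is a minimal illustration, not the article's code: it assumes a step activation, the classic perceptron learning rule, a learning rate of 0.1, and toy AND-gate data; the function names are hypothetical. Here the "acceptable error rate" is zero misclassified training samples.

```python
import numpy as np

def predict(x, weights, bias):
    # Weighted sum of the inputs plus the bias, passed through a step activation
    return 1 if np.dot(x, weights) + bias >= 0 else 0

def train_perceptron(X, y, lr=0.1, max_epochs=1000, seed=0):
    rng = np.random.default_rng(seed)
    weights = rng.normal(scale=0.1, size=X.shape[1])  # random initial weights
    bias = 0.0
    for _ in range(max_epochs):          # stop at the maximum number of iterations...
        errors = 0
        for xi, target in zip(X, y):
            error = target - predict(xi, weights, bias)  # compare with the known label
            if error != 0:
                weights += lr * error * xi  # move the weights toward the correct answer
                bias += lr * error
                errors += 1
        if errors == 0:                  # ...or once every training sample is correct
            break
    return weights, bias

# Toy data: the logical AND function (linearly separable, so training converges)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])
weights, bias = train_perceptron(X, y)
print([predict(xi, weights, bias) for xi in X])  # [0, 0, 0, 1]
```

Because AND is linearly separable, the perceptron convergence theorem guarantees the loop stops with every sample classified correctly; on data that is not linearly separable, only the max_epochs limit would end it.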

To create a neural network, we simply begin to add layers of perceptrons together, creating a multi-layer perceptron model of a neural network. You’ll have an input layer which directly takes in your data and an output layer which will create the resulting outputs. Any layers in between are known as hidden layers because they don’t directly “see” the feature inputs within the data you feed in or the outputs. For a visualization of this check out the diagram below (source: Wikipedia).
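A NumPy sketch of a forward pass through a multi-layer perceptron with one hidden layer, showing how each layer's output becomes the next layer's input. The layer sizes (4 inputs, 5 hidden neurons, 3 outputs), the random untrained weights, and the ReLU hidden activation are illustrative assumptions:

```python
import numpy as np

def relu(z):
    return np.maximum(0, z)

def mlp_forward(x, layers):
    """Pass x through a list of (weights, bias) pairs, one pair per layer."""
    activation = x
    for i, (W, b) in enumerate(layers):
        z = activation @ W + b
        # Hidden layers use ReLU; the last (output) layer is left linear here
        activation = relu(z) if i < len(layers) - 1 else z
    return activation

rng = np.random.default_rng(42)
n_inputs, n_hidden, n_outputs = 4, 5, 3
layers = [
    (rng.normal(size=(n_inputs, n_hidden)), np.zeros(n_hidden)),    # input -> hidden
    (rng.normal(size=(n_hidden, n_outputs)), np.zeros(n_outputs)),  # hidden -> output
]
x = rng.normal(size=(2, n_inputs))  # a batch of two samples
print(mlp_forward(x, layers).shape)  # (2, 3)
```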

Keep in mind that, because their training is dominated by large matrix operations, neural networks tend to train much faster on GPUs than on CPUs. The SciKit-Learn framework isn’t built for GPU optimization. If you want to use GPUs and distributed models, take a look at other frameworks, such as Google’s open-sourced TensorFlow.

Let’s move on to actually creating a neural network with Python and SciKit-Learn!

SciKit-Learn

In order to follow along with this tutorial, you’ll need SciKit-Learn version 0.18 or later installed! It is easily installable through either pip or conda; see the official installation documentation for complete details.

Anaconda and iPython Notebook

One easy way of getting SciKit-Learn and all the other tools you need for this exercise is to use the Anaconda distribution with the IPython Notebook. This tutorial will help you get started with these tools so you can build a neural network in Python within a notebook.

Data

For this analysis we will cover one of life’s most important topics – Wine! All joking aside, wine fraud is a very real thing. Let’s see if a Neural Network in Python can help with this problem! We will use the wine data set from the UCI Machine Learning Repository. It has various chemical features of different wines, all grown in the same region in Italy, but the data is labeled by three different possible cultivars. We will try to build a model that can classify what cultivar a wine belongs to based on its chemical features using Neural Networks. You can get the data here or find other free data sets here.

First, let’s import the dataset! We’ll use the names parameter of pandas’ read_csv function to make sure that the column names associated with the data come through.
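The article's loading code is not reproduced on this page. A minimal sketch of what that step might look like, assuming the data was saved as a headerless CSV (as the UCI wine.data file is). The function name load_wine_csv and the filename are hypothetical, and the column names are copied from the tables below, original spellings included:

```python
import pandas as pd

# Column names as they appear in the tables below (spellings kept as in the article).
# The UCI wine file has no header row, so names= supplies one.
WINE_COLUMNS = ['Cultivator', 'Alchol', 'Malic_Acid', 'Ash', 'Alcalinity_of_Ash',
                'Magnesium', 'Total_phenols', 'Falvanoids', 'Nonflavanoid_phenols',
                'Proanthocyanins', 'Color_intensity', 'Hue', 'OD280', 'Proline']

def load_wine_csv(path):
    # 'path' is wherever you saved the UCI wine data; the filename is up to you
    return pd.read_csv(path, names=WINE_COLUMNS)

# Usage (hypothetical filename):
# wine = load_wine_csv('wine_data.csv')
# wine.head()
# wine.describe().transpose()
```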


|   | Cultivator | Alchol | Malic_Acid | Ash |
|---|---|---|---|---|
| 0 | 1 | 14.23 | 1.71 | 2.43 |
| 1 | 1 | 13.20 | 1.78 | 2.14 |
| 2 | 1 | 13.16 | 2.36 | 2.67 |
| 3 | 1 | 14.37 | 1.95 | 2.50 |
| 4 | 1 | 13.24 | 2.59 | 2.87 |

|   | mean | min | 25% | 50% | 75% | max |
|---|---|---|---|---|---|---|
| Cultivator | 1.938202 | 1.00 | 1.0000 | 2.000 | 3.0000 | 3.00 |
| Alchol | 13.000618 | 11.03 | 12.3625 | 13.050 | 13.6775 | 14.83 |
| Malic_Acid | 2.336348 | 0.74 | 1.6025 | 1.865 | 3.0825 | 5.80 |
| Ash | 2.366517 | 1.36 | 2.2100 | 2.360 | 2.5575 | 3.23 |
| Alcalinity_of_Ash | 19.494944 | 10.60 | 17.2000 | 19.500 | 21.5000 | 30.00 |
| Magnesium | 99.741573 | 70.00 | 88.0000 | 98.000 | 107.0000 | 162.00 |
| Total_phenols | 2.295112 | 0.98 | 1.7425 | 2.355 | 2.8000 | 3.88 |
| Falvanoids | 2.029270 | 0.34 | 1.2050 | 2.135 | 2.8750 | 5.08 |
| Nonflavanoid_phenols | 0.361854 | 0.13 | 0.2700 | 0.340 | 0.4375 | 0.66 |
| Proanthocyanins | 1.590899 | 0.41 | 1.2500 | 1.555 | 1.9500 | 3.58 |
| Color_intensity | 5.058090 | 1.28 | 3.2200 | 4.690 | 6.2000 | 13.00 |
| Hue | 0.957449 | 0.48 | 0.7825 | 0.965 | 1.1200 | 1.71 |
| OD280 | 2.611685 | 1.27 | 1.9375 | 2.780 | 3.1700 | 4.00 |
| Proline | 746.893258 | 278.00 | 500.5000 | 673.500 | 985.0000 | 1680.00 |

Train Test Split

Let’s split our data into training and testing sets. This is easy with SciKit-Learn’s train_test_split function from the model_selection module:
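A minimal sketch of the split. To keep it runnable without the CSV, it uses scikit-learn's bundled copy of the UCI wine data (load_wine, available in scikit-learn 0.20 and later), whose labels are 0, 1, 2 rather than the 1, 2, 3 in the Cultivator column; the test_size and random_state values are illustrative choices:

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split

# Bundled copy of the UCI wine data: 13 chemical features, 3 cultivar classes.
# (If you loaded the CSV into a DataFrame called wine, you would instead use
#  X = wine.drop('Cultivator', axis=1) and y = wine['Cultivator'].)
X, y = load_wine(return_X_y=True)

# Hold out 25% of the rows (also the function's default) for testing;
# random_state makes the shuffle reproducible.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)
print(X_train.shape, X_test.shape)  # (133, 13) (45, 13)
```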

Data Preprocessing

A neural network in Python may have difficulty converging before the maximum number of allowed iterations if the data is not normalized. The Multi-Layer Perceptron is sensitive to feature scaling, so it is highly recommended to scale your data. Note that you must apply the same scaling to the test set for meaningful results: fit the scaler on the training data only, then use it to transform both the training and the test data. There are many different methods for normalizing data; we will use SciKit-Learn’s built-in StandardScaler for standardization.
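A sketch of standardization with StandardScaler, again using scikit-learn's bundled wine data (load_wine) as a stand-in for the CSV. The point it illustrates is that the scaler is fitted on the training set only and then applied unchanged to both sets:

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

scaler = StandardScaler()
# Learn each feature's mean and standard deviation from the training set only...
scaler.fit(X_train)
# ...then apply exactly the same transformation to both sets
X_train = scaler.transform(X_train)
X_test = scaler.transform(X_test)
```

After this, every training column has mean 0 and standard deviation 1; the test columns will be close to, but not exactly, 0 and 1, which is expected.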

Training the model

Now it is time to train our model. SciKit-Learn makes this incredibly easy by using estimator objects. In this case we will import our estimator (the Multi-Layer Perceptron Classifier model) from the neural_network module of SciKit-Learn!

Next we create an instance of the model. There are a lot of parameters you can choose to define and customize here; we will only define hidden_layer_sizes. For this parameter you pass in a tuple consisting of the number of neurons you want in each hidden layer, where the nth entry in the tuple represents the number of neurons in the nth hidden layer of the MLP model. There are many ways to choose these numbers, but for simplicity we will choose 3 hidden layers, each with as many neurons as there are features in our data set (13), along with 500 max iterations.

Now that the model has been made, we can fit it to the training data. Remember that this data has already been processed and scaled:
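A sketch of the model creation and fitting steps described above, using scikit-learn's bundled wine data as a stand-in for the CSV. The random_state arguments are additions for repeatability; depending on the split and library version, scikit-learn may warn that the optimizer has not fully converged:

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.preprocessing import StandardScaler

X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)
scaler = StandardScaler().fit(X_train)
X_train, X_test = scaler.transform(X_train), scaler.transform(X_test)

# Three hidden layers of 13 neurons each (one per feature), up to 500 iterations
mlp = MLPClassifier(hidden_layer_sizes=(13, 13, 13), max_iter=500, random_state=42)
mlp.fit(X_train, y_train)
print(mlp.n_layers_)  # 5: input + 3 hidden + output
```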

You can see the output that shows the default values of the other parameters in the model. I encourage you to play around with them and discover what effects they have on your neural network in Python!

Predictions and Evaluation

Now that we have a model, it is time to use it to get predictions! We can do this simply with the predict() method of our fitted model:

Now we can use SciKit-Learn’s built-in metrics, such as a classification report and a confusion matrix, to evaluate how well our model performed:
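A sketch of prediction and evaluation with predict(), confusion_matrix and classification_report, using scikit-learn's bundled wine data as a stand-in for the CSV. The exact number of misclassified bottles depends on the split and the random seed, so it may differ from the single error reported below:

```python
from sklearn.datasets import load_wine
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.preprocessing import StandardScaler

# Setup: bundled wine data, train/test split, standardization, fitted MLP
X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)
scaler = StandardScaler().fit(X_train)
X_train, X_test = scaler.transform(X_train), scaler.transform(X_test)
mlp = MLPClassifier(hidden_layer_sizes=(13, 13, 13), max_iter=500, random_state=42)
mlp.fit(X_train, y_train)

predictions = mlp.predict(X_test)
# Rows are true classes, columns are predicted classes; off-diagonal cells are errors
print(confusion_matrix(y_test, predictions))
# Per-class precision, recall, F1 score and support
print(classification_report(y_test, predictions))
```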

Not bad! It looks like we misclassified only one bottle of wine in our test data! This is pretty good considering how few lines of code we had to write for our neural network in Python. The downside to using a Multi-Layer Perceptron model, however, is how difficult it is to interpret the model itself: the weights and biases do not readily tell you which features are important to the model.

However, if you do want to extract the MLP weights and biases after training your model, you can use its public attributes coefs_ and intercepts_.

coefs_ is a list of weight matrices, where the weight matrix at index i represents the weights between layer i and layer i+1.

intercepts_ is a list of bias vectors, where the vector at index i represents the bias values added to layer i+1.
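A short sketch that prints the shape of each weight matrix and bias vector for the 13-13-13 network, using scikit-learn's bundled wine data as a stand-in. With 13 input features and 3 classes, every weight matrix except the last is (13, 13), the last is (13, 3), and the final bias vector has 3 entries:

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.preprocessing import StandardScaler

X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)
X_train = StandardScaler().fit(X_train).transform(X_train)
mlp = MLPClassifier(hidden_layer_sizes=(13, 13, 13), max_iter=500, random_state=42)
mlp.fit(X_train, y_train)

for i, (W, b) in enumerate(zip(mlp.coefs_, mlp.intercepts_)):
    # W connects layer i to layer i+1; b is added to layer i+1
    print(f"layer {i} -> {i + 1}: weights {W.shape}, biases {b.shape}")
```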

Conclusion

Hopefully you’ve enjoyed this brief discussion on Neural Networks! Try playing around with the number of hidden layers and neurons and see how they affect the results of your neural network in Python!

Want to learn more? You can check out my Python for Data Science and Machine Learning course on Udemy! Get it for 90% off at this link: https://www.udemy.com/python-for-data-science-and-machine-learning-bootcamp/

If you are looking for corporate in-person training, feel free to contact me at: training AT pieriandata.com.

Please feel free to follow along with the code here and leave comments below if you have any questions!

Code that accompanies this article can be downloaded here.

Back in 2015, Google released TensorFlow, the library that would change the field of Neural Networks and eventually make it mainstream. Not only did TensorFlow become popular for developing Neural Networks, it also enabled higher-level APIs to run on top of it. One of those APIs is Keras. Keras is written in Python, and TensorFlow is not the only backend it supports: it can also run on top of CNTK and Theano. In this article, we are going to use it only in combination with TensorFlow, so if you need help installing TensorFlow or learning a bit about it, you can check my previous article. There are many benefits of using Keras, and one of the main ones is certainly user-friendliness: the API is easy to understand and pretty straightforward. Another benefit is modularity. A Neural Network (model) can be viewed either as a sequence or as a graph of standalone, loosely coupled, and fully configurable modules. Finally, Keras is easily extendable.

Installation and Setup

As mentioned before, Keras runs on top of TensorFlow, so in order for this library to work, you first need to install TensorFlow. I should also mention that for the purposes of this article I am using Windows 10 and Python 3.6, with the Spyder IDE for development, so the examples in this article may vary for other operating systems and platforms. Since Keras is a Python library, installing it is pretty standard. You can use “native pip” and install it using this command:

Or, if you are using Anaconda, you can install Keras by issuing the command:

Alternatively, you can install Keras from its GitHub source. First, clone the code from the repository:

After that, change into that folder in the terminal and run the install command:

Sequential Model and Keras Layers

One of the major reasons for using Keras is its user-friendly API. It has two types of models:

- Sequential model

- Model class, used with the functional API

The Sequential model is probably the most used feature of Keras. Essentially, it represents a linear stack of Keras layers, which makes it convenient for quickly building different types of Neural Networks just by adding layers to it. There are many types of Keras layers, too. The most basic one, and the one we are going to use in this article, is called Dense. It has many options for setting the inputs, activation functions, and so on. Apart from Dense, the Keras API provides different types of layers for Convolutional Neural Networks, Recurrent Neural Networks, etc. These are out of the scope of this post, but we will cover them in further posts. So, let’s see how one can build a Neural Network using Sequential and Dense.

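```python
from keras.models import Sequential
from keras.layers import Dense

model = Sequential()
# Hidden layer with 3 neurons; input_dim=2 declares two input nodes
model.add(Dense(3, input_dim=2, activation='relu'))
# Single output neuron: sigmoid rather than softmax, since softmax over one unit always outputs 1
model.add(Dense(1, activation='sigmoid'))
```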

In this sample, we first imported Sequential and Dense from Keras. Then we instantiated one object of the Sequential class. After that, we added one layer to the Neural Network using the add method and the Dense class. The first parameter of the Dense constructor defines the number of neurons in that layer. What is specific about this layer is that we used the input_dim parameter. By doing so, we told Keras how many inputs the network receives, which effectively adds an input layer, with the number of nodes given by input_dim, in front of this layer. So, with this one call we defined two layers: the first is the input layer with two neurons, and the second is the hidden layer with three neurons.

Another important parameter, as you may notice, is the activation parameter. With it, we define the activation function for all neurons in a specific layer. Here we used the ‘relu’ value, which means that neurons in this layer will use the rectifier (ReLU) activation function. Finally, we call the add method of the Sequential object once again to add another layer. Because we are not using the input_dim parameter, only one layer is added, and since it is the last layer we add to our Neural Network, it will also be the output layer of the network.
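For comparison with the second model type listed earlier, here is a sketch of the same small network written with the functional API: each layer is called on the output of the previous one, and Model ties the inputs to the outputs. The sigmoid output activation is a choice made here because it suits a single output neuron; the variable names are illustrative:

```python
from keras.layers import Input, Dense
from keras.models import Model

# Same network as the Sequential example: 2 inputs -> 3 ReLU neurons -> 1 output
inputs = Input(shape=(2,))                        # plays the role of input_dim=2
hidden = Dense(3, activation='relu')(inputs)      # hidden layer, 3 neurons
outputs = Dense(1, activation='sigmoid')(hidden)  # output layer, 1 neuron
model = Model(inputs=inputs, outputs=outputs)
model.summary()
```

Both styles produce the same 13 trainable parameters (2×3 weights + 3 biases, then 3×1 weights + 1 bias); the functional form becomes useful once a model needs multiple inputs, multiple outputs, or branching.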

Iris Data Set Classification Problem

Like in the previous article, we will use the Iris Data Set classification problem for this demonstration. The Iris Data Set is a famous dataset in the world of pattern recognition, and it is considered to be the “Hello World” example for machine learning classification problems. It was first introduced by Ronald Fisher, a British statistician and botanist, back in 1936. In his paper The use of multiple measurements in taxonomic problems, he used data collected for three different classes of Iris plant: Iris setosa, Iris virginica, and Iris versicolor.

This dataset contains 50 instances of each class. What is interesting about it is that the first class is linearly separable from the other two, but the latter two are not linearly separable from each other. Each instance has five attributes:

- Sepal length in cm

- Sepal width in cm

- Petal length in cm

- Petal width in cm

- Class (Iris setosa, Iris virginica, Iris versicolor)

In the next section we will build a Neural Network using Keras that will be able to predict the class of an Iris flower based on the provided attributes.

Code

Keras programs follow a workflow similar to that of TensorFlow programs. We are going to follow this procedure:

- Import the dataset

- Prepare data for processing

- Create the model

- Training

- Evaluate accuracy of the model

- Predict results using the model

Training and evaluation are crucial for any Artificial Neural Network. They are usually done using two datasets: one for training and the other for testing the accuracy of the trained network. In the real world, we will often get just one dataset, and then we will split it into two separate datasets. We usually use 80% of the data for the training set and the remaining 20% to evaluate our model. This time, this is already done for us. You can download the training set and test set, together with the code that accompanies this article, from here.
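When you only have one dataset, the 80/20 split can be done with scikit-learn. A minimal sketch, assuming scikit-learn is installed and using its bundled Iris data (labels 0 = setosa, 1 = versicolor, 2 = virginica) in place of the downloadable CSV files; stratify and random_state are illustrative choices:

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

# Stand-in for "just one dataset": the bundled Iris data, 150 samples
X, y = load_iris(return_X_y=True)

# 80% for training, 20% for evaluation; stratify keeps the three classes
# in the same proportions in both parts
train_x, test_x, train_y, test_y = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)
print(len(train_x), len(test_x))  # 120 30
```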

However, before we go any further, we need to import some libraries. Here is the list of the libraries that we need to import:

```python
# Importing libraries
from keras.models import Sequential
from keras.layers import Dense
from keras.utils import np_utils
import numpy
import pandas as pd
```

As you can see we are importing Keras dependencies, NumPy and Pandas. NumPy is the fundamental package for scientific computing and Pandas provides easy to use data structures and data analysis tools.

After we imported libraries, we can proceed with importing the data and preparing it for the processing. We are going to use Pandas for importing data:

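```python
# Column names for the Iris CSV files (COLUMN_NAMES was not defined in the
# original snippet; these names are an illustrative choice)
COLUMN_NAMES = ['SepalLength', 'SepalWidth', 'PetalLength', 'PetalWidth', 'Species']

# Import training dataset; header=0 replaces the file's first row with COLUMN_NAMES
training_dataset = pd.read_csv('iris_training.csv', names=COLUMN_NAMES, header=0)
train_x = training_dataset.iloc[:, 0:4].values  # the four measurements
train_y = training_dataset.iloc[:, 4].values    # the class label

# Import testing dataset
test_dataset = pd.read_csv('iris_test.csv', names=COLUMN_NAMES, header=0)
test_x = test_dataset.iloc[:, 0:4].values
test_y = test_dataset.iloc[:, 4].values
```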

First, we used the read_csv function to import the datasets into local variables, and then we separated the inputs (train_x, test_x) from the expected outputs (train_y, test_y), creating four separate matrices. Here is what they look like:


However, our data is not ready for processing yet. If we take a look at our expected output values, we can notice that there are three values: 0, 1 and 2. Value 0 represents Iris setosa, value 1 represents Iris versicolor, and value 2 represents Iris virginica. The good news about these values is that we didn’t get string values in the dataset. If you end up in that situation, you would need to use some kind of encoder to convert the data into something similar to what we have in our current dataset. For this purpose, you can use the LabelEncoder class of the sklearn library. The bad news about these values is that they are not in the form our Sequential model expects: the model we build will have a three-neuron softmax output layer and a categorical cross-entropy loss, so each label must be a vector with one entry per class. We therefore want to reshape the expected output from a vector of class values into a matrix with one boolean column per class value. This is called one-hot encoding. To achieve this, we will use np_utils from the Keras library:

```python
# Encoding training dataset
encoding_train_y = np_utils.to_categorical(train_y)
# Encoding testing dataset
encoding_test_y = np_utils.to_categorical(test_y)
```

If you still have doubts about what one-hot encoding is doing, take a look at the image below. It displays the train_y variable and the encoding_train_y variable. Notice that the first value in train_y is 2, and look at the corresponding row in encoding_train_y.
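A sketch of both conversions without Keras, for readers who want to see the mechanics: LabelEncoder (from scikit-learn, as mentioned above) turns hypothetical string labels into integers, and a small NumPy helper (one_hot, a hypothetical name) builds the same kind of matrix that to_categorical produces:

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder

def one_hot(labels, num_classes=None):
    # Each integer label selects the matching row of an identity matrix
    labels = np.asarray(labels, dtype=int)
    if num_classes is None:
        num_classes = labels.max() + 1  # same default as to_categorical
    return np.eye(num_classes)[labels]

# Hypothetical string labels, as they might appear in a raw CSV file
species = ['Iris-virginica', 'Iris-setosa', 'Iris-versicolor']

# LabelEncoder sorts the class names alphabetically, so setosa -> 0,
# versicolor -> 1, virginica -> 2 (the numbering used in this dataset)
encoder = LabelEncoder()
integer_labels = encoder.fit_transform(species)

print(integer_labels)  # [2 0 1]
print(one_hot(integer_labels))
# [[0. 0. 1.]
#  [1. 0. 0.]
#  [0. 1. 0.]]
```

The first label is 2, so the first row has its 1 in the third column, which is exactly the pattern to look for in encoding_train_y.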

Once we have imported and prepared the data, we can create our model. We already know we need to do this by using the Sequential and Dense classes. So, let’s do it:

```python
# Creating a model
model = Sequential()
model.add(Dense(10, input_dim=4, activation='relu'))
model.add(Dense(10, activation='relu'))
model.add(Dense(3, activation='softmax'))

# Compiling model
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
```

This time we are creating:

- one input layer with four nodes, because our input values have four attributes

- two hidden layers with ten neurons each

- one output layer with three neurons, because we have three output classes

In the hidden layers, neurons use the rectifier (ReLU) activation function, while in the output layer neurons use the softmax activation function, which makes the three output values lie between 0 and 1 and sum to 1, so they can be read as class probabilities. After that, we compile our model, which is where we define our cost function and optimizer. In this instance, we use the Adam gradient descent optimization algorithm with a logarithmic cost function (called categorical_crossentropy in Keras).
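The softmax output and the categorical cross-entropy cost can be written out in a few lines of NumPy. This is a sketch of the standard formulas, not Keras's internal implementation; the clipping constant eps is an assumption added to avoid taking log(0):

```python
import numpy as np

def softmax(z):
    # Subtract the row maximum for numerical stability; the result is unchanged
    e = np.exp(z - np.max(z, axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # Mean over samples of -sum(y_true * log(y_pred)); eps avoids log(0)
    y_pred = np.clip(y_pred, eps, 1.0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=-1)))

logits = np.array([[2.0, 1.0, 0.1]])  # raw outputs of the last Dense layer
probs = softmax(logits)
print(probs, probs.sum())             # each row sums to 1
y_true = np.array([[1.0, 0.0, 0.0]])  # one-hot label for class 0
print(categorical_crossentropy(y_true, probs))
```

With a one-hot label, the loss reduces to minus the log of the probability assigned to the correct class, so it approaches 0 as that probability approaches 1 and equals log(3) for a uniform guess over three classes.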

Finally, we can train our network:

```python
# Training a model
model.fit(train_x, encoding_train_y, epochs=300, batch_size=10)
```

And evaluate it:


```python
# Evaluate the model
scores = model.evaluate(test_x, encoding_test_y)
# scores[0] is the loss; scores[1] is the accuracy metric requested in compile()
print('\nAccuracy: %.2f%%' % (scores[1] * 100))
```


If we run this code, we will get these results:

We built the same network on the same dataset as we did with TensorFlow in the previous article, and we got the same accuracy: 0.93. That is pretty good. After this, we can call our classifier on a single data sample and get a prediction for it.
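A sketch of a single-sample prediction. The trained model from this article is not available here, so the sketch retrains the same architecture briefly (50 epochs, an arbitrary choice) on scikit-learn's bundled Iris data. predict_single is a hypothetical helper, and to_categorical is imported directly from keras.utils, which also works in recent Keras releases where the np_utils module is no longer available:

```python
import numpy as np
from keras.models import Sequential
from keras.layers import Dense
from keras.utils import to_categorical
from sklearn.datasets import load_iris

# Stand-in training data: the bundled Iris set (labels 0, 1, 2)
X, y = load_iris(return_X_y=True)

model = Sequential()
model.add(Dense(10, input_dim=4, activation='relu'))
model.add(Dense(10, activation='relu'))
model.add(Dense(3, activation='softmax'))
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
model.fit(X, to_categorical(y), epochs=50, batch_size=10, verbose=0)  # short run for the sketch

def predict_single(model, sample):
    # Keras expects a batch, so wrap the single sample in a (1, 4) array
    batch = np.asarray(sample, dtype='float32').reshape(1, -1)
    probabilities = model.predict(batch, verbose=0)[0]
    # The predicted class is the output neuron with the highest probability
    return int(np.argmax(probabilities)), probabilities

# Hypothetical measurements: sepal length, sepal width, petal length, petal width (cm)
label, probs = predict_single(model, [5.1, 3.5, 1.4, 0.2])
print(label, probs)
```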

Conclusion

Keras is an awesome API that makes building Artificial Neural Networks easier, and it is quite easy to get used to. In this article, we just scratched the surface of this API; in the next posts, we will explore how we can implement different types of Neural Networks using it.

Thanks for reading!


This article is a part of Artificial Neural Networks Series, which you can check out here.


Read more posts from the author at Rubik’s Code.